Interpolation in adaptive sub-sampling?

category: general [glöplog]

when I said "reflective spheres" I was referring more generally to the "geometric primitives" with the classic "Whitted raytracing" method, not to this or that intro in particular. For example, I will never believe in the RealStorm approach of only raytracing primitives and not polymeshes (strange nobody mentioned this engine so far in the thread btw). Anyway, my point was that as soon as you add textures, and a bounding volume hierarchy or kdtree or bih to do (real) raytracing, the incoherence of the screen partitioning will kill the performance gains you see for the scenes you are testing. Nothing wrong with doing it tho, we are not talibans, I was just seconding Ector who I think had a point.

dude

Quote:

yeah because that was the best we could do then, but we know when the horse is dead for good :).

Oh no, Decipher killed the sphere hype! In 2009!

Oh and btw, it's ~62FPS because of vsync. Doesn't anyone know what's behind a renderer when it gets stuck at ~60FPS?

I know what's behind it when it gets stuck at 60, not 62 :)

Actually, since you bothered to show the framerate I assumed it was the real unthrottled one. So, what IS your real framerate? You didn't even say so far :)

Around 159, but it's still unoptimized code. My CPU isn't fully loaded; the threads do wait now and then, and CPU load is at ~80%.

Quote:

yeah because that was the best we could do then, but we know when the horse is dead for good :).

First of all, like Gargaj said earlier: first you release a 4K that is exactly what you tell others to "get over", a month (or something like that) later you get off telling others that they should "broaden their horizon".

If anything, this turn to raytraced graphics has been going on for quite a while now. Therefore it's not surprising that more and more people are trying it and subsequently asking questions; it's becoming mainstream. And maybe spheres and such are a logical primitive to start with - Jacco (author of Arauna) has been wrestling with this stuff for over 10 years, so can beginners be excused for not diving into the same depths instantly? I guess your own production kind of proves that point perfectly.

I suggest you get off your high horse until you trace a few spheres yourself at a less crappy framerate.

Iq:

Well... yes... My new kd-tree raytracer is much faster than the sphere example, but that example is brute-force, so I wanted to show it to compare with the samples I saw here.

I think it is not enough to say "with that cpu you can make that or those" without a working example or to say "but Arauna is faster" blah blah, because Arauna is not brute force, so we are comparing melons to apples.

And, 70 moving and overlapping spheres in brute force continues being a difficult task for current computers. With the reflection it is near 100 million sphere intersections per image, multiply it by the framerate you desire and you have a little problem.
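
To put a rough number on that figure, here is a minimal sketch. The post does not give the resolution or the ray count, so 1024x768 and two rays per pixel (primary plus one reflection bounce) are assumptions, and `brute_force_tests` is a hypothetical helper name.

```c
#include <stdint.h>

/* Brute force means every ray is tested against every sphere.
   Assumed parameters (not from the post): resolution and rays per pixel. */
uint64_t brute_force_tests(uint32_t width, uint32_t height,
                           uint32_t rays_per_pixel, uint32_t spheres)
{
    return (uint64_t)width * height * rays_per_pixel * spheres;
}
```

With those assumptions, `brute_force_tests(1024, 768, 2, 70)` gives 110,100,480 tests per image, and at 60 frames per second that is about 6.6 billion tests per second. Shadow rays would add to this.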

So, I believe any data structure such as BIH, kd-tree, grids or whatever is the way to go in realtime raytracing, but on the other hand it is good to have simpler references for people who are starting with their raytracers. In this case, it is good to see how good your sphere intersection routine is.

And then, about the subsampling thing, it is still faster, mostly for the first ray, and only if your objects are bigger than a pixel, and at the cost of possible glitches. It was faster 10 years ago, and it will continue being faster the same way today in cpu software rendering.
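
For readers new to the subsampling idea, a minimal sketch of one common form of it: trace only the block corners, interpolate when they all hit the same object, and subdivide otherwise. This is not anyone's actual code from the thread. `demo_trace`, `shade_block` and the one-disc scene are hypothetical, and corner samples are re-traced rather than cached to keep it short.

```c
#include <stdbool.h>

typedef struct { int id; float shade; } Sample;  /* id 0 = background */

/* Hypothetical stand-in for a real tracer: one disc of radius 5 at (8,8). */
Sample demo_trace(int x, int y)
{
    int dx = x - 8, dy = y - 8;
    Sample s = { 0, 0.0f };
    if (dx * dx + dy * dy <= 25) { s.id = 1; s.shade = 1.0f; }
    return s;
}

static float lerpf(float a, float b, float t) { return a + (b - a) * t; }

/* Shades the size x size block at (x0, y0) into out[] and returns the number
   of rays traced. size must be a power of two. Corner samples are not cached
   between blocks here, which a real renderer would do. */
int shade_block(float *out, int stride, int x0, int y0, int size)
{
    if (size == 1) {
        out[y0 * stride + x0] = demo_trace(x0, y0).shade;
        return 1;
    }
    Sample c00 = demo_trace(x0, y0),        c10 = demo_trace(x0 + size, y0);
    Sample c01 = demo_trace(x0, y0 + size), c11 = demo_trace(x0 + size, y0 + size);

    bool same = c00.id == c10.id && c00.id == c01.id && c00.id == c11.id;
    if (same) {
        /* All corners agree: bilinearly interpolate instead of tracing. */
        for (int y = 0; y < size; ++y)
            for (int x = 0; x < size; ++x) {
                float u = (float)x / size, v = (float)y / size;
                out[(y0 + y) * stride + x0 + x] =
                    lerpf(lerpf(c00.shade, c10.shade, u),
                          lerpf(c01.shade, c11.shade, u), v);
            }
        return 4;
    }
    /* Corners disagree: split into four half-size blocks and recurse. */
    int h = size / 2;
    return 4 + shade_block(out, stride, x0,     y0,     h)
             + shade_block(out, stride, x0 + h, y0,     h)
             + shade_block(out, stride, x0,     y0 + h, h)
             + shade_block(out, stride, x0 + h, y0 + h, h);
}
```

Calling `shade_block` once per 8x8 block of a 16x16 buffer fills the whole image. The "possible glitches" come from the same-corners test: anything that fits between the corner samples is never seen.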

but even if your objects are bigger than a pixel you could still miss some objects (e.g. a 7x7 (projected) sphere inside an 8x8 subsampling pattern). this also shows in animations (take the FAN demos, where this happens 'all' the time).
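
The 7x7-inside-8x8 case can be checked with a small sketch. It assumes the subsampling pattern is a regular grid of samples every `step` pixels, and `grid_hits_circle` is a hypothetical helper, not code from any of the demos mentioned.

```c
#include <stdbool.h>

/* Returns true if at least one sample of a regular grid (spacing `step`,
   covering 0..width by 0..height) lands inside the projected sphere,
   modelled here as a circle with centre (cx, cy) and the given radius. */
bool grid_hits_circle(int width, int height, int step,
                      float cx, float cy, float radius)
{
    for (int y = 0; y <= height; y += step)
        for (int x = 0; x <= width; x += step) {
            float dx = (float)x - cx, dy = (float)y - cy;
            if (dx * dx + dy * dy <= radius * radius)
                return true;
        }
    return false;
}
```

A circle of radius 3.5 centred at (4, 4) sits entirely between the samples of an 8-pixel grid, so `grid_hits_circle(64, 64, 8, 4.0f, 4.0f, 3.5f)` is false. Move the same circle to (8, 8) and it is hit, which is why the object pops in and out during animation.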

also, from my own experience using RT on CUDA, GPUs suffer from additional incoherence when subsampling (or even adaptive sampling) as soon as you have real materials (multiple textures and lights, etc) or a hierarchy to traverse. IMHO it's always faster for moderate+ complexity scenes to simply trace a ray instead of interpolating on GPUs or anything that has wide SIMD/T.

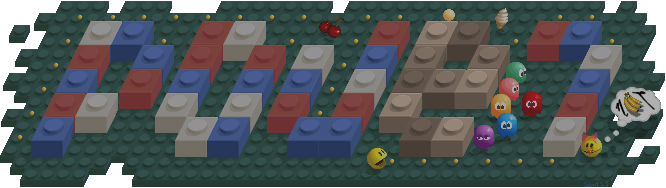

xTr1m, we want this:

:)

Quote:

[...] because Arauna is not brute force, so we are comparing melons to apples [...]

Of course it's not brute force! I mean, what would be the point of making things slow if you can make them fast? I mean, this is the demoscene, we try to optimize, not to unoptimize... so why would you ever be proud of making things slow in bruteforce when you can do it fast?

Quote:

And, 70 moving and overlapping spheres in brute force continues being a difficult task for current computers.

Sure, making it in bruteforce is a difficult task. Making it on a Game Boy is even harder. So.... again, me doesn't compute.

iq: compared to your screenshot machine, no one computes enough :P

i know this sucks and i already should know this, but it's bugging me like hell.

you see that wrap-problem?

it seems like it's the interpolation of the u-v coordinates in the interpolator function that causes this. it only happens when i change the coords to chars or unsigned chars. it does not happen when i use unsigned shorts. i use floats when intersecting a plane. i haven't tried this on a sphere because i haven't implemented uv-coordinates for them yet, but i am sure that it's not the uv-coordinates themselves. it happens when i interpolate; i've even tried casting the chars to shorts before interpolating, without luck. can this have something to do with offset problems or the lerp function? i've even tried to interpolate fixed-point numbers, but that gets worse! please help!

i am thinking of seeing if an extra bit can solve this issue, like using 9 bits instead of 8 per coord. but i have no clue what to do, i've tried almost everything now.

not per coord, per component i mean.

one extra bit was not enough. i had to add 4. so that means my uvcoords take 16 bits in total. i'm just gonna have to use it like this, it seems.

uhm. not 16. i meant 24 bits. damn im tired :S

you could try to have a look at the values of your UV coords, so that you can multiply by some constant to have the UV values "optimized" for your 16 or 24 bits :)

anyway if it works with shorts but not with chars, then it's probably just too big (value > 255), so you might want to divide, and lose some precision.

btw it's very inspiring to see you work on and document a realtime raytracer from scratch, even if it's only about spheres ;)

BarZoule: hey, yeah. its quite rewarding to work on this. i could have used other primitives than a sphere but primitive-intersections is not my priority at the moment. its so easy to see if the reflections, refraction and other properties work with this primitive anyway :)

i wonder if the precision is too low already. but it's strange that it only happens at the edge of the texture. wrapping (0xff) doesn't work either. it's strange. thanks for the tip anyway. i'll have another look at the lerp function.

rydi, it is not strange at all... You are using cyclic textures, so at one point you get texture coordinate 250 and at the other 3 (259 % 256), and you will interpolate backwards, creating that effect.

I suggest interpolating the UV of the plane, not the texture one. So you compute the texture position and the modulo during the interpolation, not before it.

And, of course, if you use int32 instead of char8, you will move the problem very far, but with int16 you will see it every 256 repetitions of your 256x256 texture
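
The 250 / 259 example can be sketched in a few lines. This is only an illustration under assumptions: a 256-texel-wide texture, non-negative plane coordinates, and hypothetical function names `lerp_wrapped_first` and `lerp_then_wrap`. It is not rydi's interpolator.

```c
#include <stdint.h>

/* Problem case: wrap both endpoints to 8 bits first, then interpolate.
   250 and 259 become 250 and 3, so the lerp runs backwards across the
   whole texture. */
uint8_t lerp_wrapped_first(float u0, float u1, float t)
{
    uint8_t a = (uint8_t)((int)u0 & 255);
    uint8_t b = (uint8_t)((int)u1 & 255);
    return (uint8_t)(a + (b - a) * t);
}

/* Suggested order: interpolate the unwrapped plane coordinate, then apply
   the modulo per pixel. Assumes u0 and u1 are non-negative. */
uint8_t lerp_then_wrap(float u0, float u1, float t)
{
    float u = u0 + (u1 - u0) * t;
    return (uint8_t)((int)u & 255);
}
```

Halfway between the two samples (`t = 0.5f`), the first version returns texel 126, in the middle of the texture, while the second returns 254, right next to the seam as expected. That matches the artifact showing up only at the texture edge.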

interesting, the talk about spheres. the major reason i was experimenting with raytracing was to handle things that couldn't be done efficiently with geometry, like e.g. tracing 5-10,000 spheres as metaballs for visualising the output from some fluid dynamics. a case where everything is moving, so precomputation isn't too useful, and where the function has to be evaluated along the ray - early outs are less easy and accurate.

i had that going on 4 cores at between 30-60 fps at 720p, but the metaball function wasnt soft enough and 10,000 wasnt enough - so i ditched the whole thing for this and came up with gpu raytracer hybrid approach for metaballs which could handle an almost unlimited amount (50-100,000). it looked quite smooth, but i improved it after frameranger to make it smoother and softer, and now it looks good i think.

(i.e. if you want a challenge, get off spheres and simple distance functions and try metaballs controlled by a particle sim or fluid dynamics)

smash: on what hardware requirements?

the yeti: frameranger works on 8800 up..

(but it could really use a 280 so we could up the quality)