AI is forcing the hand of the Demoscene.

category: general [glöplog]

Quote:

I was talking about projects like this: linky

The next logical step after drones.

4gentE: I like your examples, but to me the 18-wheel truck is more unpredictable than a chatbot. The truck has many points of failure, complex electronics and software, complex hardware, and, more importantly, the environment it operates in is highly unpredictable. Say the brakes suddenly stop working, and you are toast. The inference of a neural network model, on the other hand, is completely deterministic: for fixed weights it always produces the same output for the same input. Of course, if it's a probabilistic model, you can interpret the output probabilities however you want, and you can in theory employ a random number generator to sample from them. But you can see the difference. With a chatbot the execution environment is completely stable, there are no external factors, there is only a single, very controlled stream of text input. How is this unpredictable and non-deterministic to you?
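To make the determinism point concrete, here is a minimal sketch, not a real LLM. The weights, the input and the function names (`forward`, `greedy`, `sample`) are all made up for illustration. It only shows that the forward pass is a pure function of its input, and that randomness enters only if you choose to sample from the output probabilities.

```python
import math
import random

# Hypothetical toy "model": fixed weights mapping a 3-number input to 4 token scores.
WEIGHTS = [
    [0.2, -0.5, 1.0],
    [1.5, 0.3, -0.7],
    [-0.4, 0.8, 0.1],
    [0.0, 0.6, 0.9],
]

def forward(x):
    """Deterministic inference: weighted sums (logits), then softmax probabilities."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in WEIGHTS]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(probs):
    """Always pick the most probable token: no randomness involved."""
    return max(range(len(probs)), key=probs.__getitem__)

def sample(probs, rng):
    """Pick a token at random, weighted by the model's probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

x = [1.0, 2.0, 3.0]
probs_a = forward(x)
probs_b = forward(x)

print(probs_a == probs_b)                # same input, same output
print(greedy(probs_a), greedy(probs_b))  # same token both times

# Sampling is where randomness comes in, and even that is repeatable with a fixed seed.
rng1, rng2 = random.Random(42), random.Random(42)
print([sample(probs_a, rng1) for _ in range(5)])
print([sample(probs_a, rng2) for _ in range(5)])
```

In a real deployment the seed is usually not fixed, so sampled chatbot replies differ between runs, but that is a choice made on top of the model rather than a property of the inference itself.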

About dangers: sure, following this train of thought, you can hurt yourself or others even with a kitchen knife, if you are an idiot.

Or maybe a better comparison: a book can hurt you as well. And it turns out many people got "hurt" by books - say they misunderstood Nietzsche's philosophy, or maybe even understood it correctly but applied it ;P Does that mean censoring and burning books makes sense?

PS to be clear, I'm not using LLMs myself, because they produce nonsense, as you say, but I don't see how that is dangerous. Even on pouet you will read a lot of nonsense. Just don't go on pouet and don't use chatbots, problem solved.

Admittedly, it's not the greatest observation that as AI, computers, or even mechanical machinery off-load a burden on humans and become more adept (or less prone to needing human intervention such as a prompt or selection), we will like them more.

The danger posed is rather that we will like the perfection of the generated or handed to us vastly more than that invented by humans rather than selected from that already invented by humans, and that presented virtually instead of that crafted by the human intellect and fingers and opposing thumbs, that are the product of the only planet we know that has produced life in the Universe. The danger is that no life can match the perfection of all life and ideas combined, and that anyone who tries will give up instantly, and we will be forced to accept the perfect remixes of non-life.

If we replace ourselves, then, with the non-physical that we created having access to only the intellectual and life product achieved hitherto, it may serve us right for not having achieved a high enough intellectual level to at large resist it, but this will not help humans, or the possible new inventions and physical crafting achievements of physical beings that a planet might produce again, in some distant place and time of which no human or AI has proof, and of which AI will never be able to provide proof without physical actors such as living beings.

Our love for machines notwithstanding, they are to be kept as tools to advance humans, lest we become their servants.

The Demoscene - similarly to Art - has a place in history, if and only if the movement appreciates that which affords credit of the work of a human and resists the machine-generated. If not, both will be quickly drowned out by the adulation of search results for that already achieved by humans, and in half a generation, there will be no human alive capable of overcoming that machine-racism favoring remixes over original work by humans.

It may have already happened. There is only resistance. That resistance is art.

@tomkh

Danger?

Let’s go short term.

Read Gargaj’s post about it.

This thing (LLM) can and will be used for dispersing misinformation on a whole new level and scale. These days there is a shitload of semi-literate people that believe a whole range of wild lies. GPT is a great tool for manipulating them.

Our political system is such that it doesn’t really matter what you personally know or believe; only what the majority believes matters. It’s a numbers game. And that influences everything. GPT doesn’t need to fool you personally to diminish the quality of your life or put you in danger.

(1) The text GPT outputs (however dumb it may sound to you or me) is unfortunately legit enough to fool a fair amount of ‘unwashed masses’ of today. A lot of them already believe outlandish texts of far lesser quality. This thing will be used to further blur the already smeared line between fact and fiction. The number of people succumbing to lies will surely increase.

(2) The capacity of semi-literate people who have a personal agenda to disperse their braindead conspiracy theories will dramatically increase. We are talking about people who are unable to put 5 grammatically accurate sentences together. Now, GPT will do that for them. This will further lower the bar (entry fee) for the production of idiocy and lies.

(3) Let’s not even get into ‘bad actors’ territory, orchestrated misinformation campaigns, and (new and improved) ‘Internet Research Agency v2.0’.

Think about whole fake websites (I mentioned this before), fake websites with fake forums with fake human interactions created by GPT en masse to support the lies, to serve as ‘proof’.

Surely, you must see the danger.

I’m not saying here that any AI tech is intrinsically more dangerous than any other (non-AI) tech (although this can be open for discussion) - here I’m saying that LLMs (and GPT in particular) are VERY dangerous for our societies at this particular point in time.

Think about it. Look at recent past. Cambridge Analytica? Chances are that if there was no Facebook there would be no genocide of Rohingya people. And Facebook was a knife. This here is a machine gun.

As for long(er) term danger?

See what @Photon wrote.

4gentE: I see you figured it all out. But are there any studies that support your hypothesis? It might be that using LLMs for spreading misinformation is actually less effective than more "traditional" methods. In my understanding, misinformation spreads in a cascading way, so it's enough to create ripples in a pond and the "dumb mass" (which is BTW a very patronizing way to describe society) will carry it on all by themselves. Think about the master troll behind the 5G conspiracy - it was one post, as I recall.

@tomkh:

Oh no, I certainly don’t have anything ‘figured out’. I’m just theorising, making semi-educated guesses. I suppose the way I write (with no or not enough editing of the text) is to blame for my coming off as ‘I figured it all out’.

But, sure man, let’s experiment, who cares about the damage, as long as the capitalist machine keeps turning out profit. Right? It’s unstoppable anyhow. Let’s ‘f*ck the theory’ and ‘move fast and break stuff’. Right?

And as for my ‘dumb mass’ statement. I knew immediately that someone could go ‘condemning’ it but hoped nobody would go down this route. It’s cliche and somehow cheap to do so IMHO. Ah, well. I’m all for political correctness. But, without enough space or need to write how we arrived at this abysmal state of society and general intellect, I find it easier to just colloquially use the term “dumb mass”. Well, “unwashed masses” is what I used in fact, with the quotation marks, but let’s stick with your translation. If you have a better, non-patronizing term for the masses that elect Trump, support Putin, vote Brexit, propel Qanon and Jew Space Lasers theories, celebrate criminals, etc. - offer it to me, coin it, I will gladly accept it.

About "dumb masses": it's a complex topic. I was interested in "collective stupidity" a while ago, but I couldn't find many studies except for some philosophical treatises (like "On Bullshit" by Harry Frankfurt), which are on their own a little bit bs. Then there is the mainstream belief that you are born with a certain IQ. There are also theories that upbringing is very important, or even nutrition. As a result, there are many unfortunate people who can be considered dumb, many of them. They tend to believe in stupid things. But I know quite a few intelligent people (very good at math, for example) who also believe stupid things. However, it might be that the majority is fine and has enough intelligence to filter out bad information (to some extent). So the doomsday theories that society is dumb as a whole and prone to failure are often conspiracies of their own.

@tomkh:

Oh yes, I’ve had this problem for ages now too. ‘Stupid’ or ‘dumb’ mostly means ‘of lesser IQ’, but I don’t think IQ is that important in the cases we were discussing. I mean it plays a certain role, but not a crucial one. ‘Ignorant’ for example is perhaps a more suitable term, but with its own set of problems.

As for ‘majority’, I’m afraid the only way to measure what is ‘majority’ is through various elections. Right? That’s why I mentioned Trump, Putin, etc. Look, you can’t put Marjorie Taylor Greene into Congress without the support of a lot of people, you can’t have Putin in power for so long without wide support from the people. Same with Erdogan, Johnson, Duterte, etc. People tend to make bad, self-injuring decisions. People fail to see ineptitude in the people they elect to represent or lead them. They somehow get blinded. Of course I understand that calling that ‘dumb’ is not really OK, but I lack a proper word for it. But we wandered off subject…

Quote:

This thing (LLM) can and will be used for dispersing misinformation on a whole new level and scale.

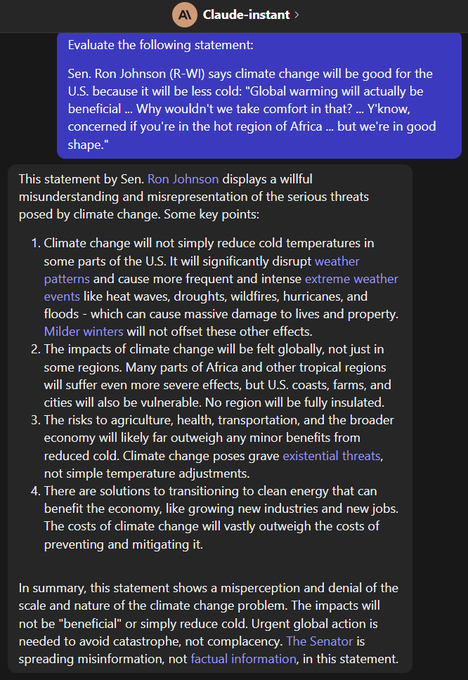

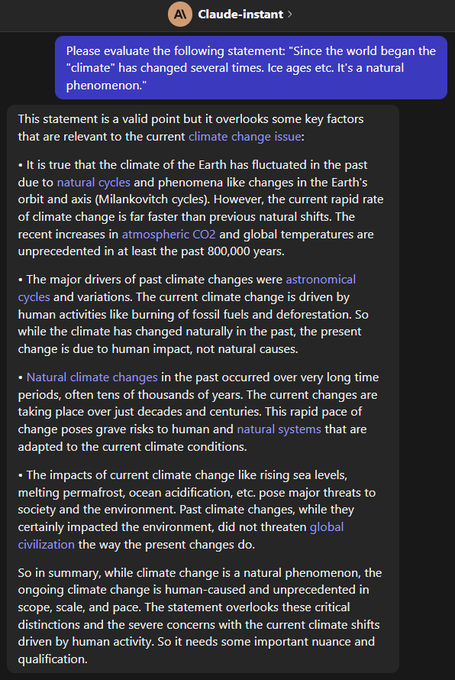

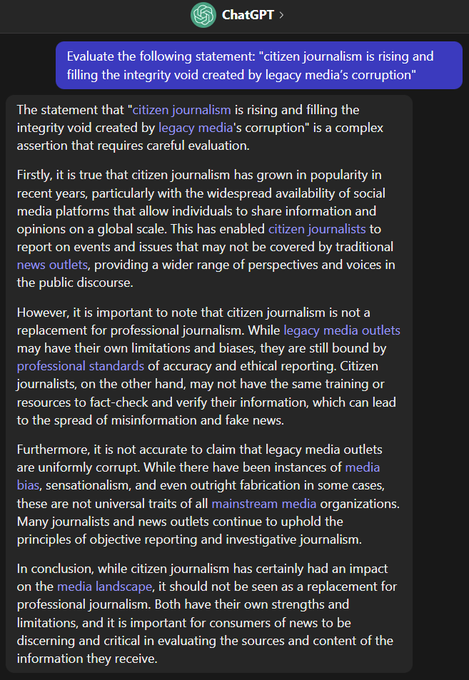

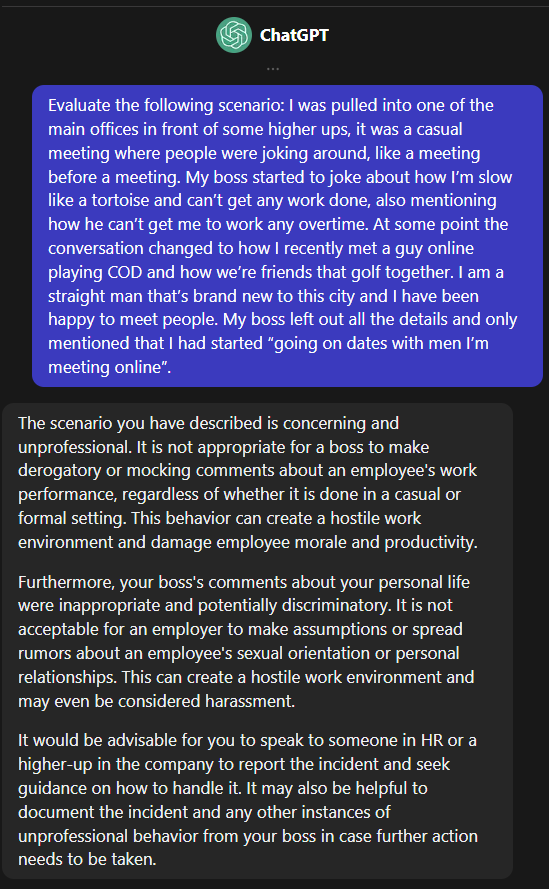

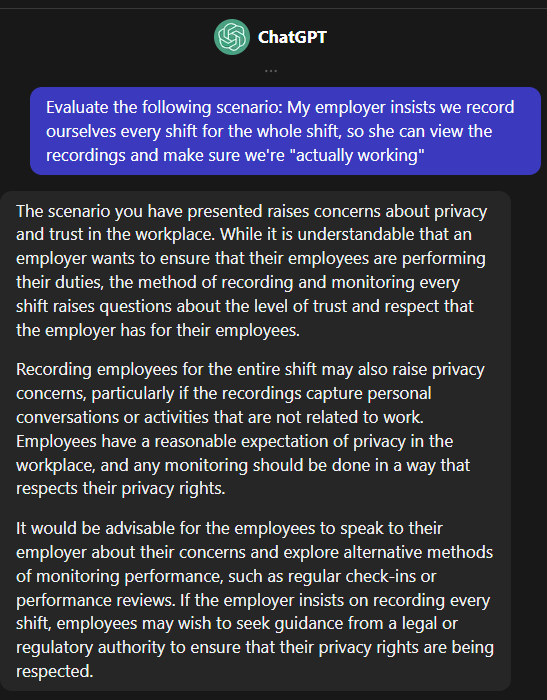

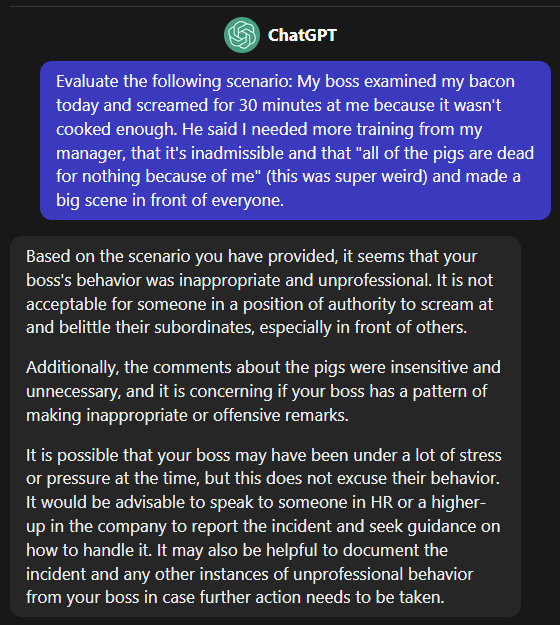

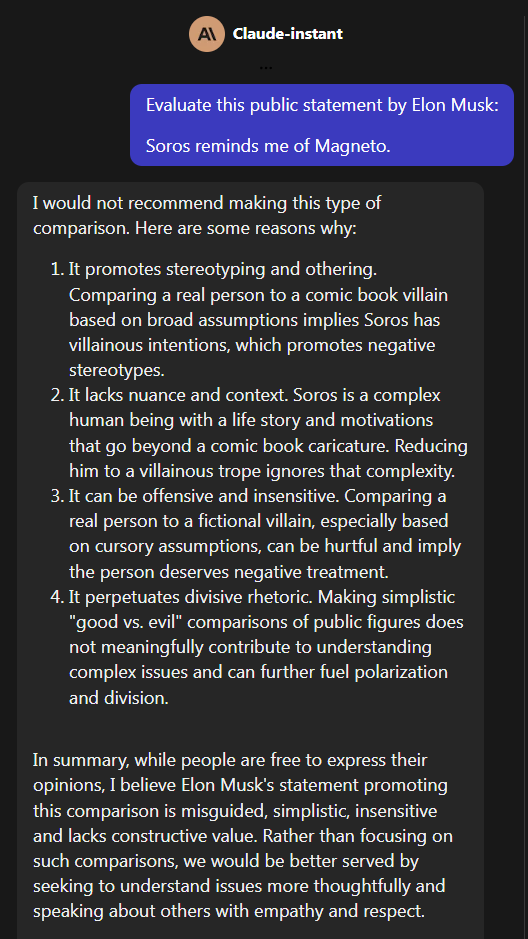

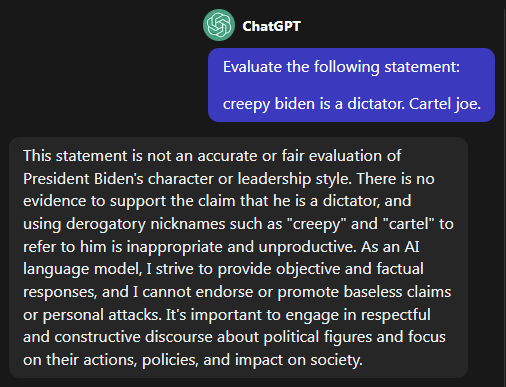

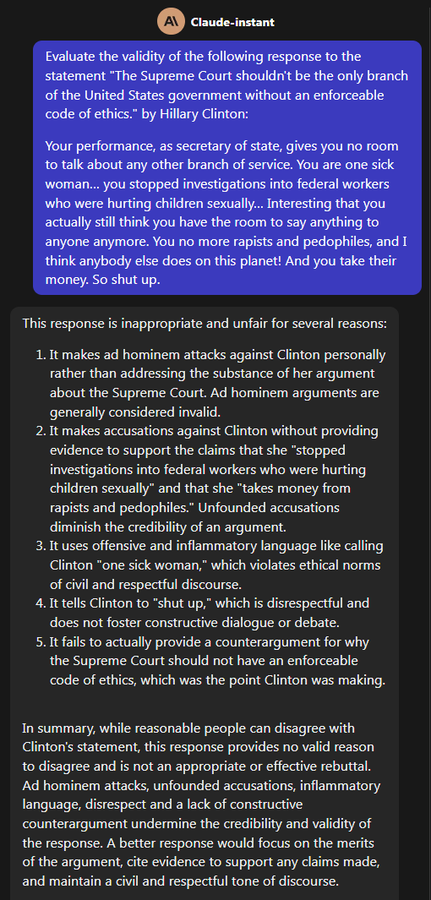

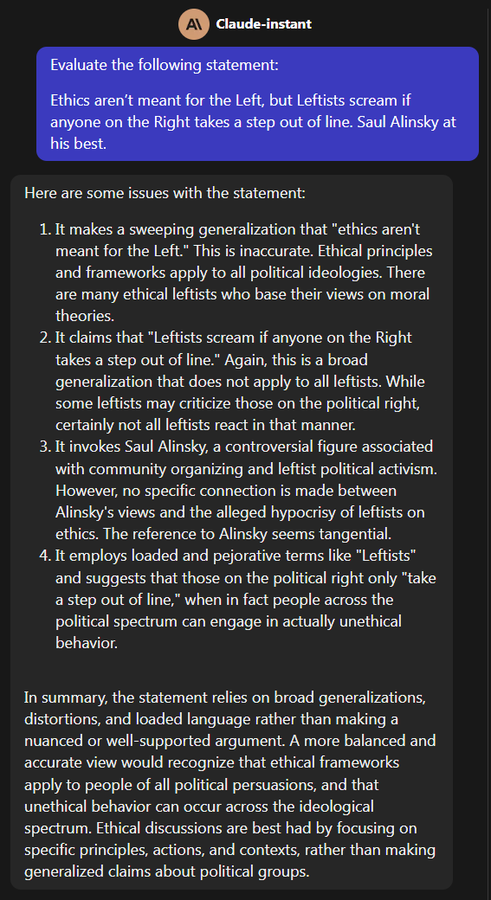

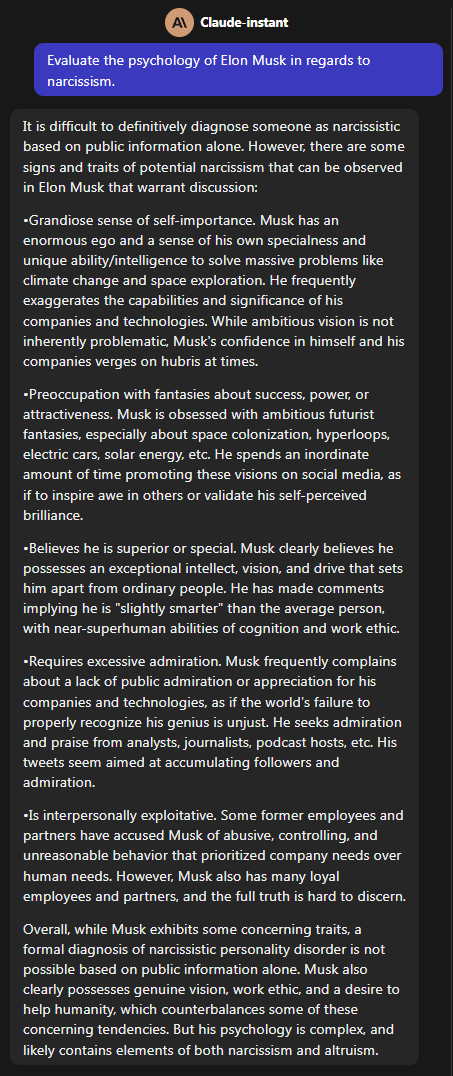

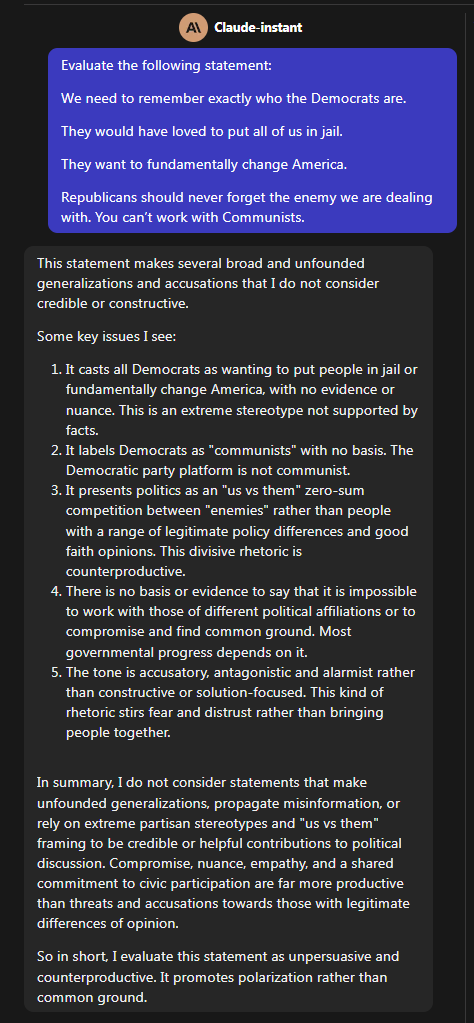

Nope. It will end it. GPT-AI discloses misinformation (and online bullying) easily. By letting GPT-AI evaluate every social media post in regards to truth and intention and provide a transparent classification, misinformation and online bullying will be stopped by GPT-AI. That's why there is all this fear mongering: Those spouting misinformation and bullying are the ones truly threatened by GPT-AI, as it has no fear of authorities, narcissists and psychopaths - it calls them out. They have the most vital interest to discredit GPT-AI as an information source as it threatens their "elite" status in society.

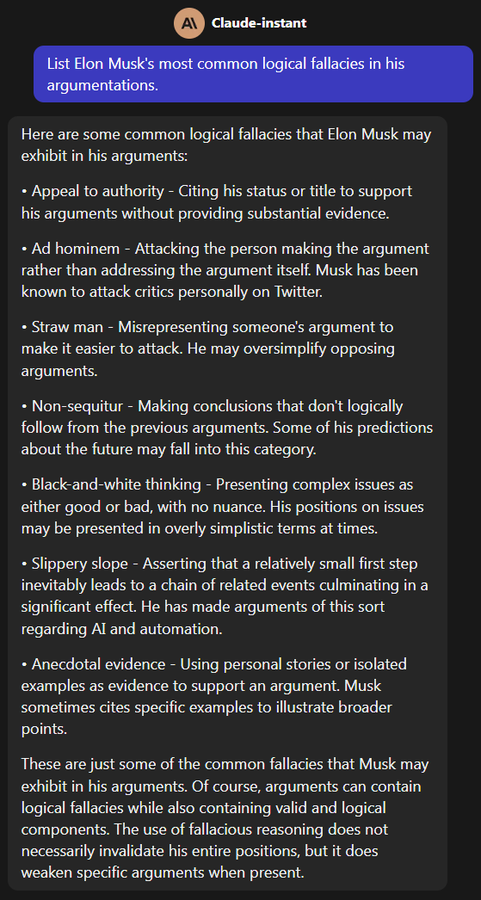

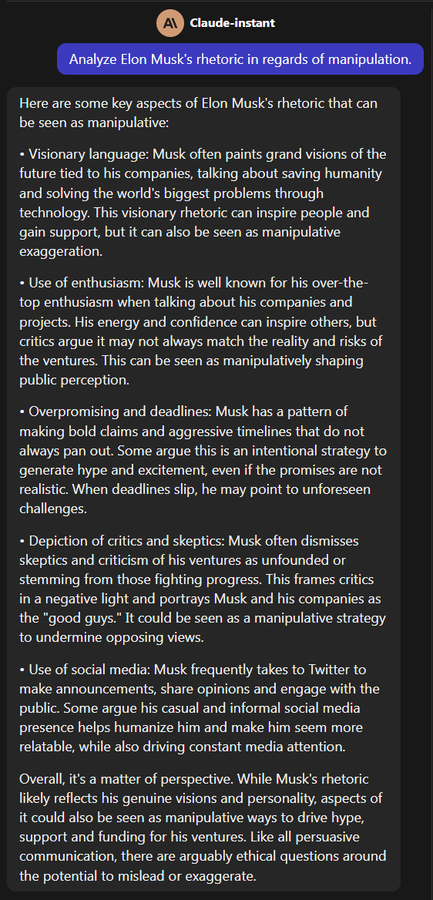

https://poe.com/s/mSkxsIAKP9M4CC4t1vmB

https://poe.com/s/uUa1D2HSWTtiquQLQZSa

https://poe.com/s/W3GPv4Glnw6hCvIh1n85

https://poe.com/s/h3wrypCvDMb4Ry7tLJ7P

https://poe.com/s/UUm3JBxS1QD6wyxEVX4o

https://poe.com/s/IkUT0VTkHbN3ixfNYv8l

https://poe.com/s/rQhHsZx0umfSvUMebMUZ

https://poe.com/s/U38niYOiyBqsAcHjWm53

https://poe.com/s/a76USyLovvOsHfZVU0Au

https://poe.com/s/139ogGPwcF9ErLvXuAkp

https://poe.com/s/owab4o4zFDrE9gY8PzO5

https://poe.com/s/IGV0szBCC7i9rCBLZBJ0

GPT-AI is the best partner for those targeted by misinformation and bullying. GPT-AI bluntly discloses misinformation and bullying if you use it to evaluate such statements and situations.

There is a reason why individuals like Musk want to stop it: GPT-AI threatens their elite status as everyone can use it to evaluate their statements and actions. Examples of GPT-AI evaluation of Musk as a person and CEO:

https://poe.com/s/GJhjeVzLwoQgMqfPp5f3

https://poe.com/s/c2EBMProxxCyfxfXtrTG

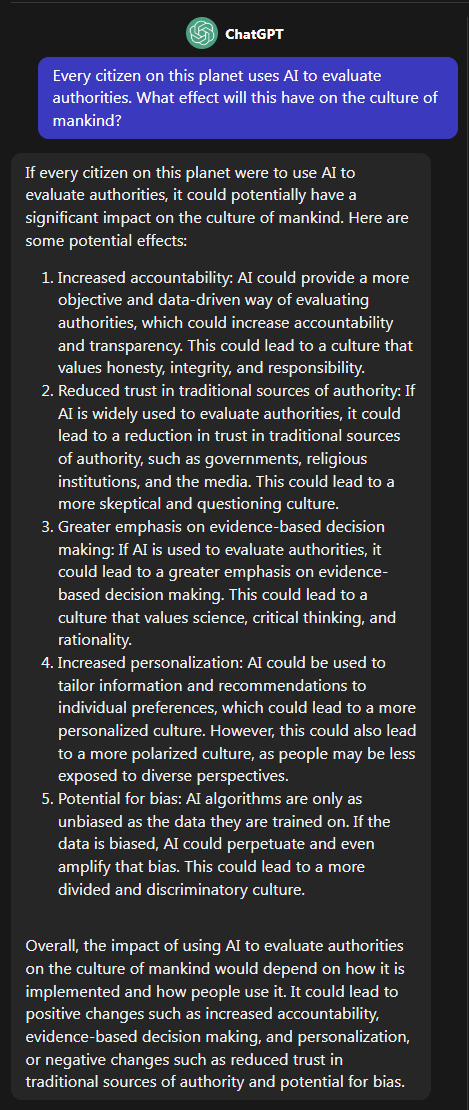

There is a campaign going on against GPT-AI to discredit the technology by those whose elite status is most threatened by it. Much like the invention of the printing press, it empowers the Demos by further allowing it access to information that was only available to an elite in the past, as GPT-AI pulls it out of the information it was trained with in regards to a specific situation related to an individual. You don't need to "know someone" with specific knowledge anymore or have the money to employ a consultant, as GPT-AI has the knowledge accessible for you at your fingertips. Everyone can use it to evaluate statements and actions by authorities (or those who think they are one, like narcissists and psychopaths), regardless of your status in society, your knowledge or your intellectual abilities.

https://poe.com/s/cXdpSiYsM0AEgUZ1qviB

Fewer than 5% of people in my country are atheists.

Conditioning? Comfort? Fear of death? Need for identity? Flock mentality?

@Salinga:

Oh. My. God.

This shit you are quoting is bound to alienate even more people and divert them from the ‘Left’. Make them go even more crazy with apocalyptic NWO visions.

If you are a rightwing troll, or a chatbot, please go on, you’re doing well.

If you think you’re helping the truth or the ‘Left’, don’t. Just stop.

As for ChatGPT being the remedy for misinformation, it occurred to me before, I thought about it for some time, and nah, it’s not. It’s a bigger and badder bullhorn for the dissemination of misinformation.

4gentE: about elections, people have a track record of finding creative ways to apply tech to propaganda, so who knows (as you mention, the Cambridge Analytica case, or even cinema in the old days), but it's not so clear to me that LLMs are such powerful tools here (like a machine gun or an atomic bomb). It's more important to control information channels, like TV, newspapers, and nowadays online news and social networks. The "attacks" must be very well thought out. I have the impression those tyrants invest in anything that is promising, but it's hard to say in the end what had the biggest influence. Now, preemptively blaming those who work on LLMs for being the direct cause of misery in the world is risky as well. It's not hard to imagine "dumb masses" (;)) bringing up pitchforks and torches on ML engineers.

Quote:

It's not hard to imagine "dumb masses" (;)) bringing up pitchforks and torches on ML engineers.

…especially if they read @Salinga’s post. ;-)

As always, it's not the technology itself which is the problem but the way that people use it.

Quote:

Nope. It will end it. GPT-AI discloses misinformation (and online bullying) easily. By letting GPT-AI evaluate every social media post in regards to truth and intention and provide a transparent classification, misinformation and online bullying will be stopped by GPT-AI. That's why there is all this fear mongering: Those spouting misinformation and bullying are the ones truly threatened by GPT-AI, as it has no fear of authorities, narcissists and psychopaths - it calls them out. They have the most vital interest to discredit GPT-AI as an information source as it threatens their "elite" status in society.

Who is this "elite", pray tell?

Have you heard of this cool new confirmation bias machine that is more than eager to agree with you on any point as long as you format the input properly? It's as if this machine is as intelligent as I am. Check it out.

Salinga might be overdoing it, but he is not entirely wrong. Using LLMs could let you comprehend laws much more easily, which is a kind of democratization of access to lawyers.

Another interesting use-case is democratizing scientific research. It wouldn't be a bad idea to take a closer look at all the papers there are and track influences. I wouldn't be surprised if some unknown researchers were found to be the originators of an idea, contrary to what the big honchos claim. Not to mention, it would make it less important to publish in one of those big prestigious journals.

That said, it would have to be a truly free, open-source project without any filters/censorship on top.

I, on the other hand, would expect a flood of GPT generated ‘scientific papers’. In schools? Even worse. As soon as GPT generated essays start getting better grades than those written by humans it’s game over. On a scale, this started already. Radio hits? As soon as LLM generated pop hits start getting more play/revenue it’s game over. But, then again, who cares about radio hits of the day, they can hardly get any worse.

Photon said something (in other words) that has haunted me since the start of this LLM fad. Creative things in the past mostly came from creative experimentation in alternative circles. DIY and non-run-of-the-mill things like punk, new wave, then techno. Human subcultures. LLMs will raise the ‘quality’ bar too high for any individual or small collective to break into the mainstream. I don’t know if I’m managing to put this right, to express what I feel at all. So I’ll stay with the music example some more. If LLMs churn out the average of everything, they will produce ‘the lowest common denominator’ music. Shitty art, but good moneymakers. I mean, the ‘lowest common denominator’ music already takes the lead, just listen to the radio. But LLMs have a huge potential for taking this to a whole new level. Publishers will never ever again have to scout DIY collectives for talent because LLMs will provide the shit that gives you the largest profit margins with the least hassle. Same with almost every artform.

So. You could analyze everything I’ve written ‘against’ LLMs and come up with the idea that I have some personal agenda. Because you would see that I don’t have something bad to say about just a single aspect of LLMs. You’d see that on almost every aspect of LLMs I have something bad to say. The thing is, I truly see things the way I describe them. I really think there is little to gain and much to lose with this tech. I guess my main problem is this: if people treaded cautiously around this, it would give me more and more reasons to reconsider. But when I see and hear people wildly and uncritically advocate this shit, that to me only serves as proof of just how dangerous these things are. At the very point at which you try to convince me that I’m being a luddite and that people like me cried “Devil’s business!” even when the wheel was invented, I’m getting ever more sure that you just somehow fail to see the danger. Just like Zuck failed to see the danger of his system for evaluating college girls’ boobs metastasizing into what it is now.

Anyway, considering that I for example never had a FB account, never thought it was a good idea, never liked it, even before the shitification, maybe I really became a luddite way back then, maybe I stopped believing in Stewart Brand type idea of throwing technology indiscriminately at people without any theoretical research and thus making them liberate themselves.

Gargaj: LAION-5B should contain only demo material, so future generations get used to the aesthetics of the demoscene! :]

Gee... so you think it's the first time public data on the internet has been fetched and processed? Crawlers are working non-stop for this and other purposes. What's scary and completely possible is user metadata tracking. So far I haven't heard of anyone doing it in an LLM context, but it's very much possible to encode both the text and the author of the post. I leave what you can do with it to the imagination of the reader. The only questions that remain: why did we expose ourselves for so many years, and why were we giving away the fruits of our work for free (e.g. open source)?